Docker Port Mapping and Container Intercommunication




In practice you often need several service containers (for example an LNMP stack) to work together, which means each container must be able to reach the services of the others. There are two ways to let containers talk to each other:

1. Port mapping: the -p option maps a specific host port to a container port. With -P (uppercase), Docker maps a random host port to every port the container exposes.
2. Linking: the --link option connects containers directly to one another.

Port mapping

1 -P (uppercase): random port mapping, bound to all addresses (0.0.0.0) by default

    [root@server01 ~]# docker run -d -P --name mynginx --rm nginx
    5c7f907176712c9c175f055ef375ed5bd140d8700a823131b3c15c35b1346448
    [root@server01 ~]# docker ps -a
    CONTAINER ID   IMAGE   COMMAND                  CREATED         STATUS         PORTS                   NAMES
    5c7f90717671   nginx   "nginx -g 'daemon of…"   8 seconds ago   Up 4 seconds   0.0.0.0:32768->80/tcp   mynginx

2 -p: map to a specific port on a specific host address

    [root@server01 ~]# docker run -d -p 192.168.1.10:80:80 --name mynginx nginx
    0fde3f18a5d70ff8a6c34754e0cbe971a6290fe28b433da0d040b1e8759c9e3c
    [root@server01 ~]# docker ps -a
    CONTAINER ID   IMAGE   COMMAND                  CREATED         STATUS         PORTS                     NAMES
    0fde3f18a5d7   nginx   "nginx -g 'daemon of…"   4 seconds ago   Up 2 seconds   192.168.1.10:80->80/tcp   mynginx

3 Check the port mappings

    [root@server01 ~]# docker port mynginx
    80/tcp -> 192.168.1.10:80

Linking containers

1 Create a database container

    [root@server01 ~]# docker run -d --name db -e MYSQL_ROOT_PASSWORD=123456 mysql
    83cf1e76e7087e10aa7f7abf1c1854a054669fdf1440e4c022a1223c25f5326a
    [root@server01 ~]# docker ps -a
    CONTAINER ID   IMAGE   COMMAND                  CREATED         STATUS         PORTS                 NAMES
    83cf1e76e708   mysql   "docker-entrypoint.s…"   2 seconds ago   Up 2 seconds   3306/tcp, 33060/tcp   db

2 Start a web container and link it to the db container. First write a Dockerfile for the web image (vim Dockerfile):

    FROM centos:7
    RUN yum -y install epel-release && yum -y install nginx
    CMD ["/usr/sbin/nginx", "-g", "daemon off;"]

Then build the image:

    docker build -t myweb:v1 .
The syntax is --link name:alias, where name is the name of the container to link to and alias is the alias it will be known by.

    [root@server01 ~]# docker run -d --name web --link db:db myweb:v1
    2fd412677f62e023581ac7961f43b2cfb4c89c68461b8ce35fe36ff2afd054ff
    [root@server01 ~]# docker ps -a
    CONTAINER ID   IMAGE   COMMAND                  CREATED         STATUS         PORTS    NAMES
    2fd412677f62   nginx   "nginx -g 'daemon of…"   5 seconds ago   Up 5 seconds   80/tcp   web

Docker effectively creates a virtual channel between the two linked containers without mapping their ports to the host, so the database port is never exposed to the outside network.

3 Docker exposes the link information to the container in two ways:

3.1 By updating /etc/hosts

    [root@server01 ~]# docker exec -it web /bin/bash
    root@2fd412677f62:/# cat /etc/hosts
    127.0.0.1   localhost
    ::1 localhost ip6-localhost ip6-loopback
    fe00::0 ip6-localnet
    ff00::0 ip6-mcastprefix
    ff02::1 ip6-allnodes
    ff02::2 ip6-allrouters
    172.17.0.2  db 83cf1e76e708
    172.17.0.3  2fd412677f62

3.2 By setting environment variables. The variables prefixed with DB_ are the ones the web container uses to reach the db container:

    [root@server01 ~]# docker exec -it web /bin/bash
    root@2fd412677f62:/# env
    DB_PORT_33060_TCP_ADDR=172.17.0.2
    DB_PORT_3306_TCP_PORT=3306
    HOSTNAME=2fd412677f62
    PWD=/
    DB_PORT_33060_TCP_PORT=33060
    DB_PORT_3306_TCP_ADDR=172.17.0.2
    DB_PORT=tcp://172.17.0.2:3306
    DB_PORT_3306_TCP_PROTO=tcp
    PKG_RELEASE=1~buster
    HOME=/root
    DB_PORT_33060_TCP=tcp://172.17.0.2:33060
    DB_ENV_MYSQL_MAJOR=8.0
    DB_ENV_MYSQL_VERSION=8.0.19-1debian10
    DB_PORT_33060_TCP_PROTO=tcp
    NJS_VERSION=0.3.9
    TERM=xterm
    SHLVL=1
    DB_ENV_GOSU_VERSION=1.7
    DB_PORT_3306_TCP=tcp://172.17.0.2:3306
    DB_NAME=/web/db
    PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin
    NGINX_VERSION=1.17.9
    _=/usr/bin/env

3.3 Log in to the web container and verify that it can reach the db container

    [root@76d52e07299f /]# telnet 172.17.0.2 3306
    Trying 172.17.0.2...
    Connected to 172.17.0.2.
    Escape character is '^]'.

The connection succeeds, and MySQL answers with its binary handshake packet, which includes the name of its default authentication plugin, caching_sha2_password.
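Inside the web container, an application can locate the database either through the db alias in /etc/hosts or through the DB_* variables. The shell function below is a minimal sketch of that lookup. The name db_endpoint is made up for illustration, and it assumes the variable names from the env output above, which only the legacy --link mechanism injects (Docker's documentation recommends user-defined networks for new setups).

```shell
#!/bin/sh
# Hypothetical helper: print "host:port" for the linked MySQL container.
# Prefers the DB_PORT_3306_TCP_* variables injected by --link db:db and
# falls back to the "db" alias from /etc/hosts plus MySQL's default port.
db_endpoint() {
  host=${DB_PORT_3306_TCP_ADDR:-db}
  port=${DB_PORT_3306_TCP_PORT:-3306}
  printf '%s:%s\n' "$host" "$port"
}

# Inside the web container, with the environment shown above,
# db_endpoint prints 172.17.0.2:3306.
```

The fallback keeps the same script usable when the variables are absent, because the alias in /etc/hosts still resolves.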
Understanding how Docker sets up networking

1: When the Docker service starts, it automatically creates a virtual bridge named docker0 on the host. A bridge can be thought of as a software switch that forwards packets between the interfaces attached to it.

    [root@server01 ~]# ifconfig
    docker0: flags=4163 mtu 1500
            inet 172.17.0.1  netmask 255.255.0.0  broadcast 172.17.255.255
            inet6 fe80::42:ffff:fe1b:98ca  prefixlen 64  scopeid 0x20
            ether 02:42:ff:1b:98:ca  txqueuelen 0  (Ethernet)
            RX packets 11564  bytes 639940 (624.9 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 11818  bytes 31513043 (30.0 MiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

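2: Docker gives docker0 an address from a private subnet that is not yet in use on the host (here 172.17.0.0/16, netmask 255.255.0.0). Every container started afterwards automatically gets an address from the same subnet on its network interface.

    [root@server01 ~]# docker inspect -f '{{.NetworkSettings.IPAddress}}' db
    172.17.0.2
    [root@server01 ~]# docker inspect -f '{{.NetworkSettings.IPAddress}}' web
    172.17.0.3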

3: When a container is created, Docker also creates a veth pair, two virtual interfaces connected to each other. One end sits inside the container as eth0; the other end stays on the host, is attached to the docker0 bridge, and has a name starting with veth.

Network information inside the web container (ifconfig comes from the net-tools package, so install it first with yum -y install net-tools):

    [root@76d52e07299f /]# ifconfig
    eth0: flags=4163 mtu 1500
            inet 172.17.0.3  netmask 255.255.0.0  broadcast 172.17.255.255
            ether 02:42:ac:11:00:03  txqueuelen 0  (Ethernet)
            RX packets 219  bytes 396248 (386.9 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 286  bytes 19282 (18.8 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

    lo: flags=73 mtu 65536
            inet 127.0.0.1  netmask 255.0.0.0
            loop  txqueuelen 1000  (Local Loopback)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 0  bytes 0 (0.0 B)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

Network information on the host:

    veth62aa061: flags=4163 mtu 1500
            inet6 fe80::7012:91ff:feee:9980  prefixlen 64  scopeid 0x20
            ether 72:12:91:ee:99:80  txqueuelen 0  (Ethernet)
            RX packets 286  bytes 19282 (18.8 KiB)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 219  bytes 396248 (386.9 KiB)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0

The host end's RX counters equal the container's TX counters and vice versa, because they are the two ends of the same link. This is how the host communicates with containers, and how containers communicate with each other.
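Both observations above, that container addresses come from docker0's subnet and that each container's eth0 is one end of a veth pair, can be checked from a script. This is an illustrative sketch: the helper names ip_to_int, ip_in_cidr and peer_ifindex are hypothetical, and peer_ifindex relies on iproute2 printing a veth interface as eth0@ifN, where N is the interface index of its peer (as in the eth0@if121 output in the manual setup below).

```shell
#!/bin/sh
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  old_ifs=$IFS
  IFS=.
  set -- $1            # split "172.17.0.3" into $1..$4
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed if address $1 lies inside CIDR block $2, e.g. 172.17.0.0/16.
ip_in_cidr() {
  net=${2%/*}
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))
  [ $(( $(ip_to_int "$1") & mask )) -eq $(( $(ip_to_int "$net") & mask )) ]
}

# Extract the peer interface index from an `ip addr` header line,
# e.g. "120: eth0@if121: mtu 1500 ..." -> "121".
peer_ifindex() {
  rest=${1#*@if}
  printf '%s\n' "${rest%%:*}"
}

# Usage sketch (needs Docker and root, so it is left commented out):
#   ip_in_cidr "$(docker inspect -f '{{.NetworkSettings.IPAddress}}' web)" 172.17.0.0/16 && echo "web is on docker0"
#   idx=$(docker exec web cat /sys/class/net/eth0/iflink)   # peer index, read inside the container
#   ip -o link | grep "^$idx:"                             # the matching veth on the host
```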
Configuring Docker networking by hand

1 Start a container box1 without networking

    [root@server01 src]# docker run -it --privileged=true --net=none --name box1 busybox:latest /bin/sh
    / #

2 On the host, find the container's process ID and expose its network namespace to ip netns:

    [root@server01 src]# docker inspect -f '{{.State.Pid}}' box1
    111828
    pid=$(docker inspect -f '{{.State.Pid}}' box1)
    echo $pid
    mkdir -p /var/run/netns/
    ln -s /proc/$pid/ns/net /var/run/netns/$pid

3 Check the bridge's IP address and netmask

    [root@server01 src]# ip addr show docker0
    3: docker0: mtu 1500 qdisc noqueue state DOWN group default
        link/ether 02:42:ff:1b:98:ca brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.1/16 brd 172.17.255.255 scope global docker0
           valid_lft forever preferred_lft forever
        inet6 fe80::42:ffff:fe1b:98ca/64 scope link
           valid_lft forever preferred_lft forever

4 Create a veth pair with interfaces A and B, attach A to the docker0 bridge, and bring it up:

    ip link add A type veth peer name B
    yum install bridge-utils
    brctl addif docker0 A
    ip link set A up
    ifconfig

5 Move B into the container's network namespace, rename it eth0, bring it up, and give it a free address from the bridge subnet plus a default gateway:

    [root@server01 src]# ip link set B netns $pid
    [root@server01 src]# ip netns exec $pid ip link set dev B name eth0
    [root@server01 src]# ip netns exec $pid ip link set eth0 up
    [root@server01 src]# ip netns exec $pid ip addr add 172.17.0.110/16 dev eth0
    [root@server01 src]# ip netns exec $pid ip route add default via 172.17.0.1

6 Verify from the box1 shell opened in step 1

    / # ip a
    1: lo: mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    120: eth0@if121: mtu 1500 qdisc noqueue qlen 1000
        link/ether 56:b3:c4:48:3a:ea brd ff:ff:ff:ff:ff:ff
        inet 172.17.0.110/16 scope global eth0
           valid_lft forever preferred_lft forever
    / # ping baidu.com
    PING baidu.com (39.156.69.79): 56 data bytes
    64 bytes from 39.156.69.79: seq=0 ttl=48 time=37.637 ms
    64 bytes from 39.156.69.79: seq=1 ttl=48 time=37.520 ms

Using an Open vSwitch bridge
By default Docker connects containers through docker0, the Linux bridge. It can be replaced with Open vSwitch, a more capable virtual switch.

Put SELinux into permissive mode:

    setenforce 0

Install the build dependencies:

    [root@server01 ~]# yum -y install rpm-build openssl-devel

1 Install Open vSwitch

1.1 Download the source tarball

    wget http://openvswitch.org/releases/openvswitch-2.3.1.tar.gz

1.2 Add a user named ovs (here it already existed)

    [root@server01 src]# useradd ovs
    useradd: user "ovs" already exists
    [root@server01 src]# id ovs
    uid=1001(ovs) gid=1001(ovs) groups=1001(ovs)

1.3 Switch to the ovs user and unpack the sources into its rpmbuild tree

    su - ovs
    mkdir -p rpmbuild/SOURCES
    cp /usr/local/src/openvswitch-2.3.1.tar.gz rpmbuild/SOURCES/
    cd rpmbuild/SOURCES/
    tar zxvf openvswitch-2.3.1.tar.gz
    [ovs@server01 SOURCES]$ pwd
    /home/ovs/rpmbuild/SOURCES

1.4 Create a spec file without the kernel-module dependency

    [ovs@server01 SOURCES]$ sed 's/openvswitch-kmod, //g' openvswitch-2.3.1/rhel/openvswitch.spec > openvswitch-2.3.1/rhel/openvswitch_no_kmod.spec

1.5 Build the RPM

    [ovs@server01 SOURCES]$ rpmbuild -bb --nocheck openvswitch-2.3.1/rhel/openvswitch_no_kmod.spec

The build succeeded if it ends with output like this:

    + umask 022
    + cd /home/ovs/rpmbuild/BUILD
    + cd openvswitch-2.3.1
    + rm -rf /home/ovs/rpmbuild/BUILDROOT/openvswitch-2.3.1-1.x86_64
    + exit 0

1.6 Install the RPM (as root)

    yum localinstall /home/ovs/rpmbuild/RPMS/x86_64/openvswitch-2.3.1-1.x86_64.rpm

1.7 Check that the command-line tools are ready with ovs-vsctl -V. Note that openvt is a different tool: openvt -V only reports "openvt from kbd 1.15.5" (the kbd console package) and says nothing about Open vSwitch.

1.8 Start the service

    [root@server01 x86_64]# /etc/init.d/openvswitch start
    Starting openvswitch (via systemctl):  [  OK  ]
    [root@server01 x86_64]# /etc/init.d/openvswitch status
    ovsdb-server is running with pid 87587
    ovs-vswitchd is running with pid 87197
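Steps 1.3 to 1.5 can be collected into one function to run as the ovs user. This is a sketch under the same assumptions as above (Open vSwitch 2.3.1, tarball downloaded to /usr/local/src); the names build_ovs_rpm and strip_kmod are made up for illustration, and strip_kmod is simply the sed substitution from step 1.4 used as a filter.

```shell
#!/bin/sh
OVS_VERSION=2.3.1   # version used in this article

# Same substitution as step 1.4: remove every "openvswitch-kmod, "
# occurrence from the spec file read on stdin.
strip_kmod() {
  sed 's/openvswitch-kmod, //g'
}

# Steps 1.3-1.5 in one go; run as the ovs user. Not called here.
# Assumes the tarball was downloaded to /usr/local/src (step 1.1).
build_ovs_rpm() {
  src=openvswitch-$OVS_VERSION
  mkdir -p "$HOME/rpmbuild/SOURCES" &&
    cp "/usr/local/src/$src.tar.gz" "$HOME/rpmbuild/SOURCES/" &&
    cd "$HOME/rpmbuild/SOURCES" &&
    tar zxf "$src.tar.gz" &&
    strip_kmod < "$src/rhel/openvswitch.spec" > "$src/rhel/openvswitch_no_kmod.spec" &&
    rpmbuild -bb --nocheck "$src/rhel/openvswitch_no_kmod.spec"
}

# Afterwards, as root:
#   yum localinstall ~ovs/rpmbuild/RPMS/x86_64/openvswitch-$OVS_VERSION-1.x86_64.rpm
```

Chaining the steps with && stops the build at the first failing command instead of running rpmbuild against a missing or half-written spec file.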

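3. Connect containers to the Open vSwitch bridge

3.1 Create the OVS bridge br0 and start two containers (box1, box2) without networking. --net=none starts the container without creating any network; --privileged=true grants the container extended privileges.

    [root@server01 ~]# ovs-vsctl add-br br0
    [root@server01 ~]# ip link set br0 up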

    [root@server01 src]# ifconfig
    br0: flags=4163 mtu 1500
            inet6 fe80::5057:5bff:fef7:9c48  prefixlen 64  scopeid 0x20
            ether 52:57:5b:f7:9c:48  txqueuelen 1000  (Ethernet)
            RX packets 0  bytes 0 (0.0 B)
            RX errors 0  dropped 0  overruns 0  frame 0
            TX packets 7  bytes 586 (586.0 B)
            TX errors 0  dropped 0 overruns 0  carrier 0  collisions 0
    [root@server01 ~]# docker run -it --privileged=true --net=none --name box1 busybox:latest /bin/sh
    Unable to find image 'busybox:latest' locally
    latest: Pulling from library/busybox
    0669b0daf1fb: Pull complete
    Digest: sha256:b26cd013274a657b86e706210ddd5cc1f82f50155791199d29b9e86e935ce135
    Status: Downloaded newer image for busybox:latest
    / #

In a second terminal, start box2:

    [root@server01 src]# docker run -it --privileged=true --net=none --name box2 busybox:latest /bin/sh
    / #

3.2 Add networking to the containers by hand. Download ovs-docker, the helper script for Docker containers provided by the Open vSwitch project, and make it executable (chmod +x ovs-docker):

    wget https://github.com/openvswitch/ovs/raw/master/utilities/ovs-docker

3.3 Attach the containers to br0 and set their IP addresses and VLAN tag

    [root@server01 src]# ./ovs-docker add-port br0 eth0 box1 --ipaddress=10.0.0.2/24 --gateway=10.0.0.1
    [root@server01 src]# ./ovs-docker set-vlan br0 eth0 box1 5
    [root@server01 src]# ./ovs-docker add-port br0 eth0 box2 --ipaddress=10.0.0.3/24 --gateway=10.0.0.1
    [root@server01 src]# ./ovs-docker set-vlan br0 eth0 box2 5

Once the ports are added, the container shows a new eth0 interface:

    / # ip a
    1: lo: mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    108: eth0@if109: mtu 1500 qdisc noqueue qlen 1000
        link/ether 3e:b7:2d:6a:b7:44 brd ff:ff:ff:ff:ff:ff
        inet 10.0.0.1/24 scope global eth0
           valid_lft forever preferred_lft forever

Now test connectivity by pinging between the two containers. From the box2 terminal, ping box1 (10.0.0.2):

    / # ping 10.0.0.2
    PING 10.0.0.2 (10.0.0.2): 56 data bytes
    64 bytes from 10.0.0.2: seq=0 ttl=64 time=0.949 ms
    64 bytes from 10.0.0.2: seq=1 ttl=64 time=0.109 ms

3.4 Assign the gateway address to br0

    [root@server01 src]# ip addr add 10.0.0.1/24 dev br0

Now, from inside box2, the gateway, box1, and the host are all reachable:

    / # ping 10.0.0.1
    PING 10.0.0.1 (10.0.0.1): 56 data bytes
    64 bytes from 10.0.0.1: seq=0 ttl=64 time=1.136 ms
    64 bytes from 10.0.0.1: seq=1 ttl=64 time=0.752 ms
    ^C
    --- 10.0.0.1 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.752/0.944/1.136 ms
    / # ping 10.0.0.2
    PING 10.0.0.2 (10.0.0.2): 56 data bytes
    64 bytes from 10.0.0.2: seq=0 ttl=64 time=0.083 ms
    / # ping 192.168.1.10
    PING 192.168.1.10 (192.168.1.10): 56 data bytes
    64 bytes from 192.168.1.10: seq=0 ttl=64 time=0.864 ms
    64 bytes from 192.168.1.10: seq=1 ttl=64 time=0.111 ms
    ^C
    --- 192.168.1.10 ping statistics ---
    2 packets transmitted, 2 packets received, 0% packet loss
    round-trip min/avg/max = 0.111/0.487/0.864 ms
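Steps 3.3 and 3.4 follow a fixed pattern, so they can be generated for any number of containers. The sketch below assumes ovs-docker sits in the current directory, the containers are named box1, box2 and so on, box N gets 10.0.0.(N+1)/24, and all of them share VLAN tag 5, as in step 3.3. The names attach_boxes, run and the DRY_RUN switch are hypothetical; with DRY_RUN=1 the commands are only printed.

```shell
#!/bin/sh
# Print the command when DRY_RUN=1, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

# attach_boxes COUNT: connect box1..boxCOUNT to br0 on VLAN 5,
# giving boxN the address 10.0.0.(N+1)/24 and gateway 10.0.0.1.
attach_boxes() {
  n=1
  while [ "$n" -le "$1" ]; do
    run ./ovs-docker add-port br0 eth0 "box$n" --ipaddress="10.0.0.$((n + 1))/24" --gateway=10.0.0.1
    run ./ovs-docker set-vlan br0 eth0 "box$n" 5
    n=$((n + 1))
  done
  # Step 3.4: give br0 the gateway address.
  run ip addr add 10.0.0.1/24 dev br0
}

# Preview without touching the system: DRY_RUN=1 attach_boxes 2
```

Previewing with DRY_RUN=1 first makes it easy to check the generated addresses before anything is attached to br0.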

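Remaining issue: the containers still cannot reach the Internet.

Building a cross-host container network

To let containers on different hosts reach each other, a tunnel is built between hosts that can already reach one another. Cross-host networking requires either Docker's built-in overlay network or a third-party network plugin. Docker's native overlay network needs one of the following:

1. Docker runs in Swarm mode
2. The Docker hosts form a cluster backed by a key-value store

This deployment uses a cluster backed by a key-value store, which has these requirements:

1. Every host in the cluster connects to the key-value store; Docker supports Consul, Etcd and ZooKeeper.
2. Every host in the cluster runs a Docker daemon.
3. Every host must have a unique hostname, because the key-value store identifies cluster members by hostname.
4. Linux hosts need kernel 3.12 or later to handle VXLAN packets; otherwise they may fail to communicate.

Multi-host container communication itself is provided by Docker's overlay network driver. Note that the key-value-store approach (the --cluster-store and --cluster-advertise daemon options used below) was deprecated in Docker 20.10 and removed in later releases; on current Docker versions the overlay driver requires Swarm mode.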
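Requirement 4 can be checked before setting anything up. A minimal sketch, assuming only that uname -r starts with MAJOR.MINOR (for example 3.10.0-1062.el7.x86_64); the function name kernel_at_least is made up for illustration. Treat it as a rough check: a plain version comparison ignores vendor backports, and RHEL/CentOS 7 kernels report 3.10 yet include VXLAN support.

```shell
#!/bin/sh
# kernel_at_least MAJOR.MINOR RELEASE: succeed if RELEASE (as printed
# by `uname -r`) is at least MAJOR.MINOR.
kernel_at_least() {
  want_major=${1%%.*}
  want_minor=${1#*.}
  rest=${2%%-*}                 # "3.10.0-1062.el7.x86_64" -> "3.10.0"
  have_major=${rest%%.*}
  rest=${rest#*.}
  have_minor=${rest%%.*}
  [ "$have_major" -gt "$want_major" ] ||
    { [ "$have_major" -eq "$want_major" ] && [ "$have_minor" -ge "$want_minor" ]; }
}

if kernel_at_least 3.12 "$(uname -r)"; then
  echo "kernel $(uname -r): 3.12 or newer"
else
  echo "kernel $(uname -r): older than 3.12, check VXLAN support"
fi
```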

Set the hostnames of the two test hosts (log out and back in for the new name to appear in the prompt):

    [root@overlay01 ~]# hostnamectl set-hostname overlay01
    [root@overlay02 ~]# hostnamectl set-hostname overlay02

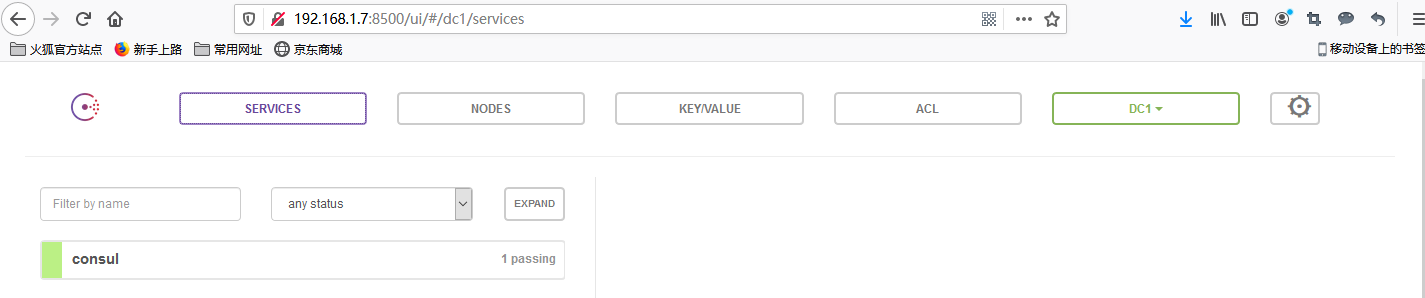

1 Set up Consul to store network information

Cross-host communication in Docker needs a key-value service to store network information. Consul, etcd, ZooKeeper and others can all fill this role; this article uses Consul, the officially recommended option. It runs on 192.168.1.7, which the --cluster-store addresses below point to:

    docker run -d --restart="always" --publish="8500:8500" --hostname="consul" --name="consul" index.alauda.cn/sequenceiq/consul:v0.5.0-v6 -server -bootstrap

Pulling images from registries outside China is slow, so the image comes from the Alauda (灵雀云) registry in China.

2 Configure the Docker daemon options on both hosts (192.168.1.7 and 192.168.1.8):

1. --cluster-store=consul://192.168.1.7:8500: the Consul address (internal IP plus the Consul port)
2. --cluster-advertise=192.168.1.7:2376: the address and port this daemon advertises to the rest of the cluster (each host uses its own IP)
3. -H unix:///var/run/docker.sock: keeps the local socket; once -H tcp://... is given, the default socket is no longer opened and docker client commands stop working without this option

The -H tcp://0.0.0.0:2376 option additionally exposes the Docker API on TCP port 2376 on every interface, without TLS in this setup.

Configuration on 192.168.1.7, /lib/systemd/system/docker.service:

    [Service]
    Type=notify
    # the default is not to use systemd for cgroups because the delegate issues still
    # exists and systemd currently does not support the cgroup feature set required
    # for containers run by docker
    #ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock
    ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2376 -H unix:///var/run/docker.sock --cluster-store=consul://192.168.1.7:8500 --cluster-advertise=192.168.1.7:2376
    ExecReload=/bin/kill -s HUP $MAINPID
    TimeoutSec=0
    RestartSec=2
    Restart=always

Configuration on 192.168.1.8, /lib/systemd/system/docker.service:

    [Service]
    Type=notify
    # the default is not to use systemd for cgroups because the delegate issues still
    # exists and systemd currently does not support the cgroup feature set required
    # for containers run by docker
    #ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock
    ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2376 -H unix:///var/run/docker.sock --cluster-store=consul://192.168.1.7:8500 --cluster-advertise=192.168.1.8:2376
    ExecReload=/bin/kill -s HUP $MAINPID
    TimeoutSec=0
    RestartSec=2
    Restart=always

Restart the Docker service:

    systemctl daemon-reload
    systemctl restart docker

Verify:

    [root@overlay02 ~]# ps aux|grep docker
    root   6830  2.5  7.4 455508 74360 ?      Ssl  14:22  0:00 /usr/bin/dockerd -H tcp://0.0.0.0:2376 -H unix:///var/run/docker.sock --cluster-store=consul://192.168.1.7:8500 --cluster-advertise=192.168.1.8:2376
    root   6985  0.0  0.0 112728   976 pts/0  S+   14:22  0:00 grep --color=auto docker
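Editing /lib/systemd/system/docker.service directly works, but a package upgrade can overwrite that file. An alternative is a systemd drop-in override. In the sketch below the function name write_docker_override is hypothetical and the dockerd flags are the ones from the unit files above. The empty ExecStart= line is required because systemd rejects a second ExecStart for a service that is not Type=oneshot unless the inherited one is cleared first.

```shell
#!/bin/sh
# write_docker_override DIR ADVERTISE_IP CONSUL_ADDR
# Writes DIR/override.conf with the dockerd options from this section.
write_docker_override() {
  dir=$1 ip=$2 consul=$3
  mkdir -p "$dir" &&
    cat > "$dir/override.conf" <<EOF
[Service]
# The empty ExecStart= clears the command from the packaged unit first.
ExecStart=
ExecStart=/usr/bin/dockerd -H tcp://0.0.0.0:2376 -H unix:///var/run/docker.sock --cluster-store=consul://$consul --cluster-advertise=$ip:2376
EOF
}

# On 192.168.1.8, for example (run as root):
#   write_docker_override /etc/systemd/system/docker.service.d 192.168.1.8 192.168.1.7:8500
#   systemctl daemon-reload && systemctl restart docker
```

Only the advertise address differs between the two hosts, so the same function covers both.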

3 Create the overlay network

    [root@overlay01 ~]# docker network create -d overlay wg
    00651f61204bc1e464c8722b96df34c586d76cccbf716c6129be9f87440eb629
    [root@overlay01 ~]# docker network ls
    NETWORK ID     NAME     DRIVER    SCOPE
    21405694cf9d   bridge   bridge    local
    718808af2e24   host     host      local
    239cb40d03a6   none     null      local
    00651f61204b   wg       overlay   global

The SCOPE column shows global: the network definition is stored in Consul and shared by every host in the cluster.

4 Test the network: create a container on each host, box1 on 192.168.1.7 and box2 on 192.168.1.8

    [root@overlay01 ~]# docker run -it --net=wg --name box1 busybox:latest /bin/sh
    Unable to find image 'busybox:latest' locally
    latest: Pulling from library/busybox
    0669b0daf1fb: Pull complete
    Digest: sha256:b26cd013274a657b86e706210ddd5cc1f82f50155791199d29b9e86e935ce135
    Status: Downloaded newer image for busybox:latest
    / # ip a
    1: lo: mtu 65536 qdisc noqueue qlen 1000
        link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00
        inet 127.0.0.1/8 scope host lo
           valid_lft forever preferred_lft forever
    32: eth0@if33: mtu 1450 qdisc noqueue
        link/ether 02:42:0a:00:00:02 brd ff:ff:ff:ff:ff:ff
        inet 10.0.0.2/24 brd 10.0.0.255 scope global eth0
           valid_lft forever preferred_lft forever
    35: eth1@if36: mtu 1500 qdisc noqueue
        link/ether 02:42:ac:12:00:02 brd ff:ff:ff:ff:ff:ff
        inet 172.18.0.2/16 brd 172.18.255.255 scope global eth1
           valid_lft forever preferred_lft forever
    / # ping baidu.com
    PING baidu.com (39.156.69.79): 56 data bytes
    64 bytes from 39.156.69.79: seq=0 ttl=48 time=37.749 ms

The container has two interfaces: eth0 on the overlay network wg, and eth1 on the host-local docker_gwbridge bridge, which is what gives it Internet access. 10.0.0.3 is the IP address of box2 on the other host:

    / # ping 10.0.0.3
    PING 10.0.0.3 (10.0.0.3): 56 data bytes
    64 bytes from 10.0.0.3: seq=0 ttl=64 time=1.704 ms
    64 bytes from 10.0.0.3: seq=1 ttl=64 time=0.667 ms
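The overlay interface eth0 reports mtu 1450 while eth1 reports 1500. The difference is the VXLAN encapsulation overhead: each frame is wrapped in an outer IPv4 header (20 bytes), a UDP header (8) and a VXLAN header (8), and the container's own Ethernet header (14) travels inside the tunnel as well. A small worked sketch, assuming an IPv4 underlay with a 1500-byte MTU; the function name vxlan_inner_mtu is made up for illustration:

```shell
#!/bin/sh
# vxlan_inner_mtu UNDERLAY_MTU: largest IP packet a container can send
# through a VXLAN tunnel without fragmenting on the underlay network.
vxlan_inner_mtu() {
  outer_ip=20      # outer IPv4 header
  udp=8            # outer UDP header
  vxlan=8          # VXLAN header
  inner_eth=14     # the container's Ethernet header, carried inside the tunnel
  echo $(( $1 - outer_ip - udp - vxlan - inner_eth ))
}

vxlan_inner_mtu 1500   # prints 1450, matching eth0 in the box1 output
```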

Consul also comes with a web interface once installed; it is worth exploring if you are interested.



